Move over, woodwind and strings – in the future, the ultimate musical instrument could be the human brain.

Artist Luciana Haill uses medical electroencephalogram, or EEG, monitors embedded in a Bluetooth-enabled sweatband to record the activity of her frontal lobes, then beams the data to a computer that plays it back as song.

Now Haill is taking her gig on the road, joining 30 experimental artists this week to showcase creative and wacky new audio technologies on the Future of Sound tour of England. Audience members will be asked to don the electrodes so they can jointly think up a harmony.

‘The brain operates in the same units sound waves are measured in – hertz,’ said Haill. ‘You’re getting raw data from the prefrontal cortex but feeding it through software – a little bit from the left hemisphere and a little bit from the right.’

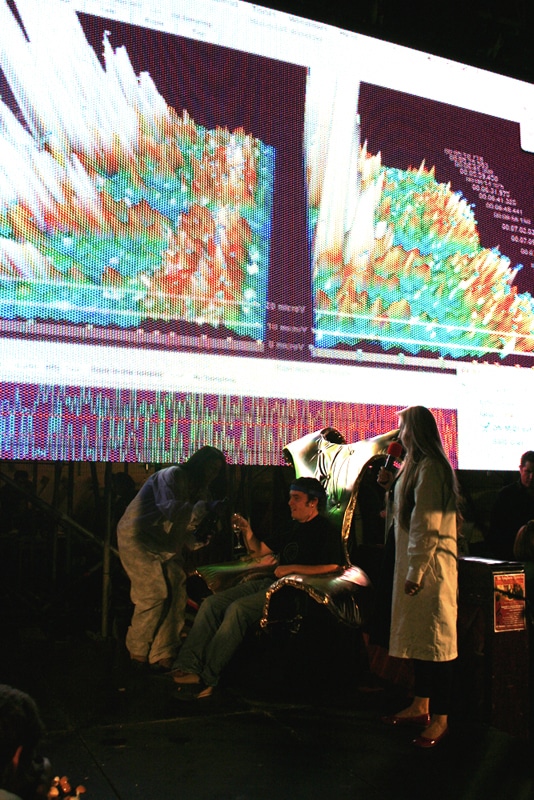

In a performance, Haill, who doubles as a consultant in neuro-linguistic programming and is an affiliate member of the Society of Applied Neuroscience, reclines on a couch and relaxes her mind. Meanwhile, her MacBook Pro records brain activity from the sweatband as spikes on a 3-D graph.

Each marker triggers ethereal notes and pitch changes that match a specific neurological state. Joined together, the notes become mental music and, while they tend toward the ambient, the performances sound more tuneful than many art-audio experiments. Haill likens it to ‘playing a theremin with your brain.’

Stopping at Sheffield, Gateshead, Norwich and London until April, the Future of Sound tour is the brainchild of ’80s Brit synth-pop musicians Vince Clarke of Erasure, whose hits included ‘A Little Respect,’ and Martyn Ware of Heaven 17, best known for the track ‘Temptation.’

Other performers include the Modified Toy Orchestra, which makes music from old toys like the Speak & Spell, and Anna Hill, who has made a soundscape from low-frequency recordings of the aurora borealis.

‘These events always attract maverick people and Luciana is one of them,’ Ware told Wired News, ahead of a tour preview at London’s Groucho Club on Tuesday, Jan. 23. ‘It’s a bit of a freak show, a mad scientist experiment. The aim is a coalescing of different cultural vapors into something solid.’

He continued: ‘I’m an old-fashioned guy. I like tunes. But the average person can now create some extraordinary sounds with something like Electroplankton, for example, without really knowing that what they are using is a really sophisticated bit of software. It will be entertaining for people who are interested in the future, which is everyone.’

Haill has been using her brain-scanning hardware for the last 14 years to play MIDI with her mind, but says it is the inter-application events protocol in Apple’s OS X operating system that allows her to trigger different GarageBand samples and other devices for each type of neural activity and for each side of the cortex.

‘I want to create something that we can all listen to even if we don’t quite understand it,’ she says. ‘I want people to understand that brain waves are changing. It’s not something you could have as a ringtone, but you could trigger a film clip, for example, when your mind is slipping into that twilight.’

Haill, who also plays ukulele and double bass, says she sometimes plays with jazz and rhythm ‘n’ blues musicians for a richer experience. Using neuro-linguistic programming techniques at Future of Sound, she will attempt to unpick the ‘neural sonic soup’ of rookie electrode wearers until their signals, each beamed to the machine, fuse to create a chilled-out melody.